Hi, I’m Mana.

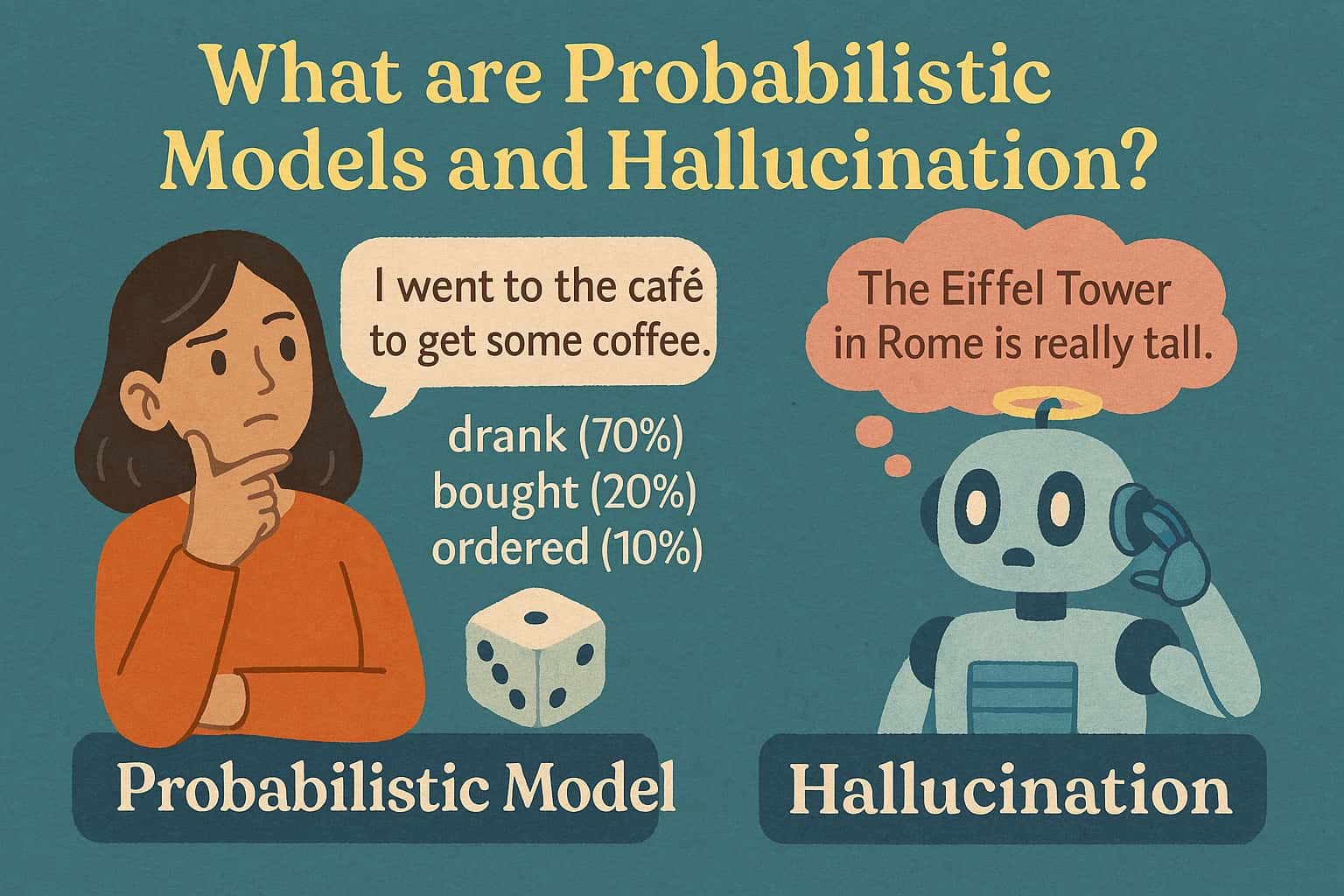

Today I want to explain two important ideas that help us better understand generative AI: probabilistic models and hallucination.

Generative AI can write natural sentences, create images, and even generate speech. But behind the scenes, it is making predictions: it chooses the next word (or the next piece of an image or sound) based on statistical patterns learned from its training data.

🎲 Generative AI Is Built on Probability

Generative AI, especially large language models (LLMs), uses probability to decide what to say next.

For example, if the sentence is:

“Today I went to a café and ordered a ___.”

The model might assign probabilities like this:

- “coffee” – 70%

- “tea” – 20%

- “sandwich” – 10%

It then chooses one word based on those probabilities, adds it to the sentence, predicts the next word, and so on.
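Here is a minimal Python sketch of that choice, using the illustrative probabilities from the café example. The function names are hypothetical, and a real model scores every word in a very large vocabulary rather than three. The sketch only contrasts always taking the most likely word (greedy choice) with picking at random in proportion to the probabilities (sampling).

```python
import random

# Illustrative next-word probabilities from the café example (not from a real model).
next_word_probs = {"coffee": 0.70, "tea": 0.20, "sandwich": 0.10}

def greedy_choice(probs: dict[str, float]) -> str:
    """Always pick the single most likely word."""
    return max(probs, key=probs.get)

def sample_choice(probs: dict[str, float], rng: random.Random) -> str:
    """Pick a word at random, weighted by its probability."""
    words = list(probs)
    weights = list(probs.values())
    return rng.choices(words, weights=weights, k=1)[0]

rng = random.Random(0)  # fixed seed so runs are repeatable
print("Greedy:", greedy_choice(next_word_probs))  # always "coffee"

# Sample many times: the counts should roughly follow the 70/20/10 split.
counts = {word: 0 for word in next_word_probs}
for _ in range(10_000):
    counts[sample_choice(next_word_probs, rng)] += 1
print("Sampled counts:", counts)
```

Greedy choice returns "coffee" every time, while sampling usually returns "coffee" but sometimes "tea" or "sandwich". That randomness is one reason the same prompt can produce different answers.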

It doesn’t actually “understand” anything; it just predicts what sounds most likely.

🤔 Why Does AI Make Things Up? That’s Called “Hallucination”

Because generative AI predicts plausible text rather than looking up verified facts, it sometimes produces information that isn’t true. This behavior is known as hallucination.

Examples of hallucination:

- Referring to books or articles that don’t exist

- Citing fictional statistics or laws

- Inventing fake company names or people

These outputs often sound believable, which makes them easy to trust by mistake.

📌 Why Do Hallucinations Happen?

Here are three common reasons:

- Probabilistic prediction: the model focuses on likely-sounding output, not factual correctness.

- Flawed training data: AI learns from web content, which includes both true and false information.

- Vague or unclear prompts: if a question is ambiguous, AI may guess something plausible, even if it’s incorrect.
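To see how fluent but false text can come from probabilistic prediction alone, here is a toy sketch. It uses hypothetical training data and a deliberately tiny bigram model (it only learns which word tends to follow which), not a real LLM. It generates sentences word by word, which also shows the “predict the next word, and so on” loop in action.

```python
import random
from collections import defaultdict

# Hypothetical toy "training data": two sentences that are true in this tiny world.
corpus = [
    "alice wrote a book about mars .",
    "bob wrote a paper about venus .",
]

# Record which words follow each word (a bigram model).
follows: dict[str, list[str]] = defaultdict(list)
for sentence in corpus:
    words = ["<start>"] + sentence.split()
    for prev, nxt in zip(words, words[1:]):
        follows[prev].append(nxt)

def generate(rng: random.Random, max_words: int = 20) -> str:
    """Repeatedly sample the next word, looking only at the previous word."""
    word, output = "<start>", []
    while len(output) < max_words:
        word = rng.choice(follows[word])  # repeated entries act as weights
        if word == ".":
            break
        output.append(word)
    return " ".join(output) + " ."

rng = random.Random(1)
generated = {generate(rng) for _ in range(200)}
for sentence in sorted(generated):
    label = "in training data" if sentence in corpus else "NEW (not supported by the data)"
    print(f"{sentence!r}: {label}")
```

Some outputs, such as "alice wrote a paper about venus .", are grammatical and plausible but appear nowhere in the training data: the model stitched together likely-looking pieces with no notion of whether the result is true. Real LLMs are vastly more sophisticated, but the same gap between “plausible” and “true” applies.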

🛡️ How to Handle Hallucinations

Generative AI is useful, but don’t trust its answers blindly. Here are some simple practices:

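- ✅ Always verify important facts manually, especially in areas like business, education, or healthcare.

- ✅ Ask for sources in your prompts. Try questions like “Can you provide a source?” or “What is this based on?”, and check that any source it names actually exists, since citations can be hallucinated too.

- ✅ Use real-time or search-augmented tools. Approaches like RAG (Retrieval-Augmented Generation) retrieve relevant information from databases or websites and give it to the model as context, so its answers can be grounded in real sources.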
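The core idea of RAG can be sketched in a few lines: find text relevant to the question, then put that text into the prompt so the model answers from it rather than from memory alone. This is a hypothetical toy version, assuming a tiny in-memory list of documents and simple word-overlap scoring. Real systems typically use a search engine or vector (embedding) similarity, and the finished prompt would be sent to an actual model, which is not shown here.

```python
# Hypothetical knowledge base; in practice this would be a search index or database.
documents = [
    "Opening hours: the café is open 8 a.m. to 6 p.m. on weekdays.",
    "The café serves coffee, tea, and sandwiches.",
    "Free Wi-Fi is available to all customers.",
]

def tokenize(text: str) -> set[str]:
    """Lowercase words with surrounding punctuation stripped."""
    return {w.strip(".,?!:;").lower() for w in text.split()} - {""}

def retrieve(question: str, docs: list[str], k: int = 1) -> list[str]:
    """Rank documents by how many words they share with the question."""
    q = tokenize(question)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]

def build_prompt(question: str, docs: list[str]) -> str:
    """Put the retrieved text in front of the question so the answer can be grounded."""
    context = "\n".join(f"- {d}" for d in retrieve(question, docs))
    return (
        "Answer using only the context below. "
        "If the answer is not there, say you don't know.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )

print(build_prompt("What are the café opening hours?", documents))
```

Grounding does not eliminate hallucination, but it gives the model real text to draw on and gives you a source to check the answer against.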

📘 Final Takeaways

- Generative AI doesn’t “understand”; it predicts based on probability.

- Hallucinations are a normal side effect of probabilistic prediction, so important outputs need human oversight.

- To use AI responsibly, it’s crucial to understand how it works and its limitations.

Let’s continue learning and using these tools wisely! 📘
