Hello, I’m Mana.

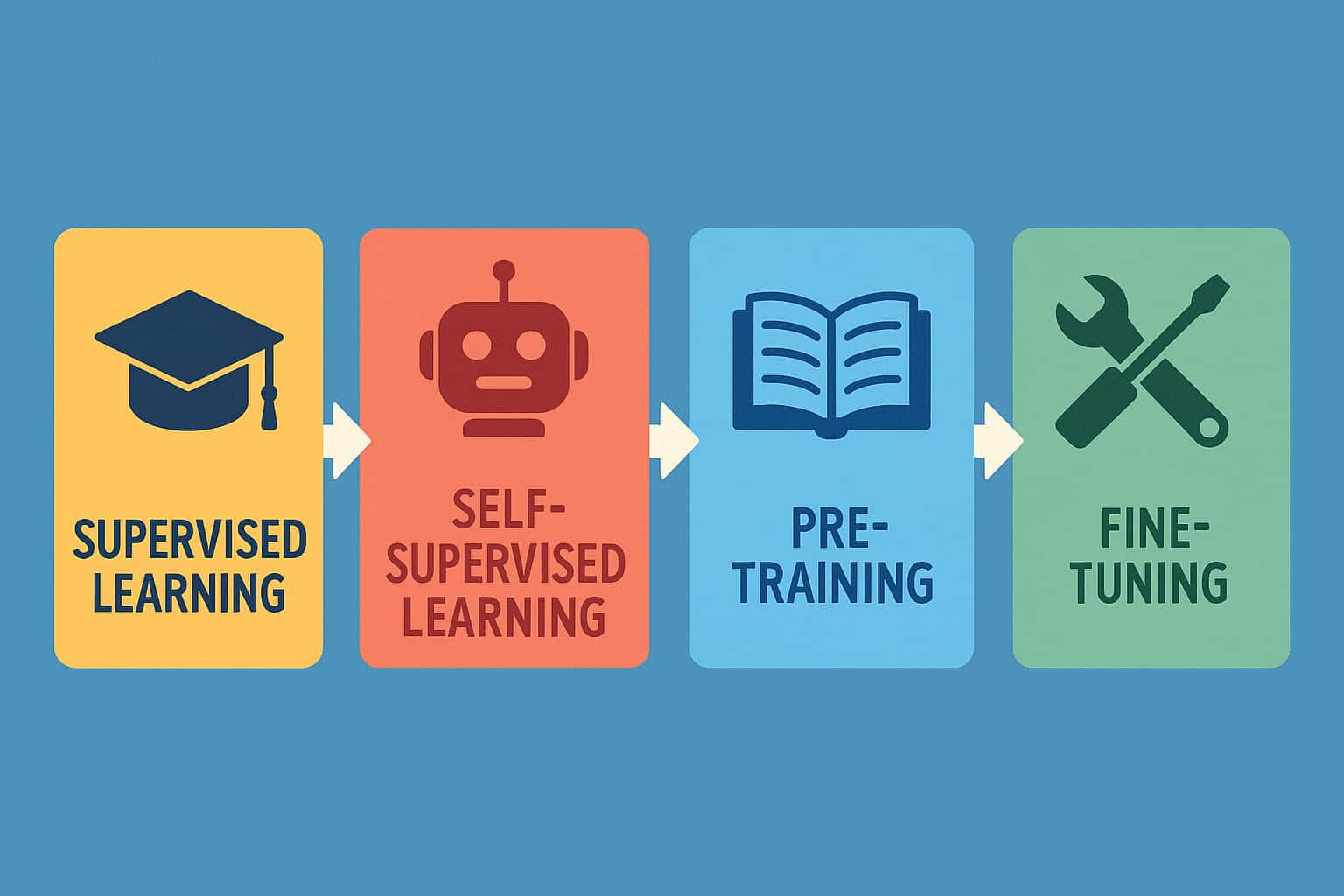

Today, I’d like to walk you through four important keywords that explain how generative AI learns: Supervised Learning, Self-Supervised Learning, Pre-training, and Fine-tuning.

🎓 What is Supervised Learning?

Supervised learning is a method in which the AI learns from data that includes the correct answers (labels).

Example:

Input: an email message (task: “Is this email spam?”)

Label: “Yes (spam)” or “No (not spam)”

By training on many examples like this, the AI learns rules for making decisions.

🔑 Key Points:

- Best suited for tasks with clear correct answers (such as classification or numeric prediction)

- Can create high-accuracy models, but requires a lot of labeled data
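To make this concrete, here is a minimal sketch of supervised learning in plain Python, assuming a tiny, made-up set of labeled emails. It uses a simple perceptron over bag-of-words features; the dataset and the function names (`features`, `train_perceptron`, `predict`) are hypothetical and only illustrate learning from (input, label) pairs. Real spam filters use far more data and more sophisticated models.

```python
from collections import defaultdict

# Hypothetical labeled data: each email comes with a human-provided label.
# 1 = spam, 0 = not spam
labeled_emails = [
    ("win a free prize now", 1),
    ("claim your free money", 1),
    ("meeting moved to monday", 0),
    ("lunch with the team tomorrow", 0),
]

def features(text):
    """Bag-of-words features: the set of lowercase words in the email."""
    return set(text.lower().split())

def train_perceptron(data, epochs=10):
    """Learn one weight per word from labeled examples (perceptron rule)."""
    weights = defaultdict(float)
    bias = 0.0
    for _ in range(epochs):
        for text, label in data:
            words = features(text)
            score = bias + sum(weights[w] for w in words)
            prediction = 1 if score > 0 else 0
            error = label - prediction  # +1, 0, or -1
            # Only adjust weights when the prediction disagrees with the label
            if error != 0:
                for w in words:
                    weights[w] += error
                bias += error
    return weights, bias

def predict(weights, bias, text):
    """Classify a new email: 1 = spam, 0 = not spam."""
    score = bias + sum(weights.get(w, 0.0) for w in features(text))
    return 1 if score > 0 else 0

weights, bias = train_perceptron(labeled_emails)
print(predict(weights, bias, "free prize inside"))       # → 1 (spam)
print(predict(weights, bias, "team meeting on monday"))  # → 0 (not spam)
```

The model only learns from words that appear in its labeled examples, which is why supervised learning needs a lot of labeled data to generalize well.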

🤖 What is Self-Supervised Learning?

In self-supervised learning, the AI creates its own training targets by hiding parts of the data and learning to predict them, so no human labeling is required.

Example:

“Today I went to the café and drank ____.”

Target: “coffee” (the word that was hidden from the original sentence)

Repeating these tasks at scale helps the AI learn context and meaning in language.

🔑 Key Points:

- Can learn from unlabeled data

- Scales well to large datasets; often used in training large language models (LLMs)

- Commonly used during the pre-training phase
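The sketch below shows how self-supervised training pairs can be built from unlabeled text, assuming simple whitespace tokenization and one hidden word per example. The function name `make_masked_examples` and the `____` mask token are illustrative choices, not a specific library's API. Real LLM pipelines use subword tokenizers, and models differ in what they hide: BERT-style models predict masked words, while GPT-style models predict the next word.

```python
def make_masked_examples(sentence, mask_token="____"):
    """Turn one unlabeled sentence into (input, target) training pairs.

    Each word is hidden in turn; the hidden word itself becomes the target,
    so the "labels" come from the data, not from a human annotator.
    """
    words = sentence.split()
    examples = []
    for i, target in enumerate(words):
        masked = words[:i] + [mask_token] + words[i + 1:]
        examples.append((" ".join(masked), target))
    return examples

pairs = make_masked_examples("Today I went to the café and drank coffee")
print(len(pairs))  # → 9
print(pairs[-1])   # → ('Today I went to the café and drank ____', 'coffee')
```

A single sentence yields nine training examples without any human effort; applied to billions of sentences, this is why self-supervised learning scales so well.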

🧠 What is Pre-training?

Pre-training is the first training phase, in which the AI learns general knowledge and language patterns from large text datasets.

Think of it as reading an encyclopedia to get a broad understanding of the world.

🔑 Key Points:

- Builds a foundation in grammar, sentence structure, and general knowledge

- Not yet focused on specific tasks

- Often uses self-supervised learning
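As a deliberately tiny illustration, the sketch below "pre-trains" a bigram model: it reads raw, unlabeled sentences and counts which word tends to follow which, using each next word as its own training target (a self-supervised objective). The corpus and the function names (`pretrain_bigram`, `predict_next`) are hypothetical. Real pre-training trains large neural networks on vastly more text, but the idea of learning general patterns from unlabeled data is the same.

```python
from collections import Counter, defaultdict

def pretrain_bigram(corpus):
    """Count which word follows which in raw text.

    Every next word acts as the target for the word before it, so no
    human labels are needed (self-supervised).
    """
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent word seen after `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Hypothetical "general" corpus standing in for large-scale text
general_corpus = [
    "i drank coffee at the cafe",
    "she drank tea in the morning",
    "we drank coffee after lunch",
]
model = pretrain_bigram(general_corpus)
print(predict_next(model, "drank"))  # → coffee
```

The model is not trained for any particular task; it has simply absorbed general patterns from the text it read, which is exactly the role of pre-training.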

🔧 What is Fine-tuning?

Fine-tuning is the process of taking a pre-trained model and training it further on task-specific data so that it performs well on specific tasks.

Examples:

✅ Optimized for chatbots

✅ Specialized in legal or medical fields

✅ Used for summarization, translation, or Q&A

🔑 Method and Characteristics:

- Usually done using supervised learning

- Can improve performance even with relatively small datasets, because the model already has a general foundation from pre-training

- Final step to make the model practical and useful
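Continuing the same toy idea, here is a minimal sketch of fine-tuning: start from a copy of the pre-trained parameters (here, bigram counts) and keep training them on a small, task-specific dataset. The corpora and the names `train_bigram` and `predict_next` are hypothetical. In real LLMs, supervised fine-tuning updates neural-network weights using curated examples, often written by humans, such as question-and-answer pairs; this counting model only mimics the "start from a pre-trained model, then adapt it with a little data" pattern.

```python
import copy
from collections import Counter, defaultdict

def train_bigram(corpus, counts=None):
    """Update bigram counts with `corpus`.

    counts=None  -> start from scratch (pre-training)
    counts=model -> continue from a copy of an existing model (fine-tuning)
    """
    counts = copy.deepcopy(counts) if counts is not None else defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent word seen after `word`, or None if unseen."""
    followers = counts.get(word.lower())
    return followers.most_common(1)[0][0] if followers else None

# Hypothetical general corpus (pre-training)
general_corpus = [
    "i drank coffee at the cafe",
    "she drank tea in the morning",
    "we drank coffee after lunch",
]
# Hypothetical small, domain-specific corpus for a tea-shop chatbot (fine-tuning)
tea_shop_corpus = [
    "our guests drank tea with honey",
    "regulars drank tea every afternoon",
]

base_model = train_bigram(general_corpus)
tuned_model = train_bigram(tea_shop_corpus, counts=base_model)

print(predict_next(base_model, "drank"))   # → coffee
print(predict_next(tuned_model, "drank"))  # → tea
print(predict_next(tuned_model, "she"))    # → drank (kept from pre-training)
```

Two short sentences were enough to shift the model toward the tea shop's domain, while what it learned during pre-training is kept. Copying the counts first leaves the base model intact, so the same foundation can be fine-tuned separately for different tasks.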

🔄 Summary: How They Connect

First, self-supervised learning is used to carry out pre-training.

During pre-training, the AI learns language and general knowledge, which produces a foundation model.

Then, through fine-tuning, the model is further adjusted to specialize in specific tasks.

Finally, the result is a practical model that can be used in real-world applications.

| Term | Labels Used? | Main Use | Training Phase |
|---|---|---|---|
| Supervised Learning | Yes | Fine-tuning | Final stage |
| Self-Supervised Learning | No (labels come from the data itself) | Pre-training | Early stage |
| Pre-training | Usually not (self-supervised) | Builds foundation model | Starting point |
| Fine-tuning | Usually yes (supervised) | Task-specific adaptation | Polishing step |

📘 Final Thoughts

Generative AI doesn’t become “smart” all at once. Its capabilities are built step by step through these layered learning methods.

By understanding how AI learns, we can better understand its strengths, limitations, and how to use it responsibly.

Let’s keep learning together about the world of AI! 📘
